22+ How To Read Parquet File

How to View a Parquet File on a Windows Machine | How to Read a Parquet File | ADF Tutorial 2022: in this video we are going to learn how to view a Parquet file on a Windows machine. In pandas, `read_parquet` loads a Parquet object from a file path and returns a DataFrame.

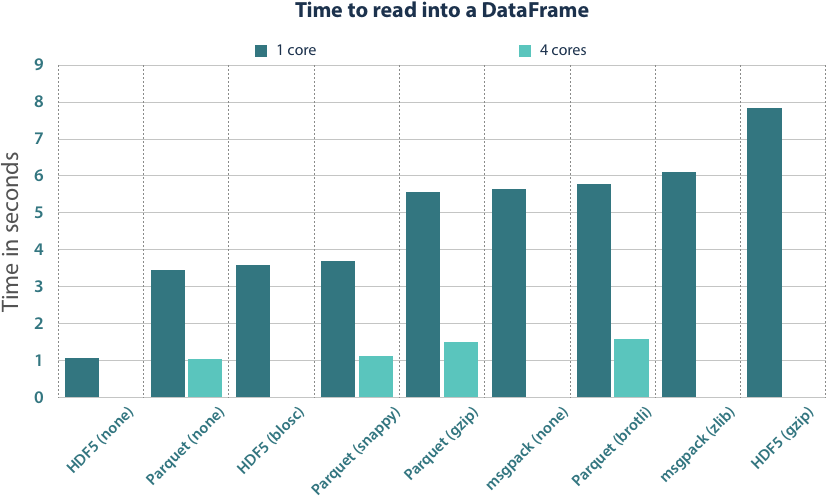

Efficient Dataframe Storage With Apache Parquet Blue Yonder Tech Blog

You can use AWS Glue to read Parquet files from Amazon S3 and from streaming sources, as well as to write Parquet files to Amazon S3. In pandas, the standard method to read any object (JSON, Excel, HTML) is `read_objectname()`, for example `read_json()`. Spark SQL supports both reading and writing Parquet files and automatically captures the schema of the original data. It also reduces data storage.

The pandas call is `read_parquet(path, engine="auto")`. It reads the Parquet file at the specified file path and returns a DataFrame containing the data. Online viewers are another quick way to read or view your Parquet files.

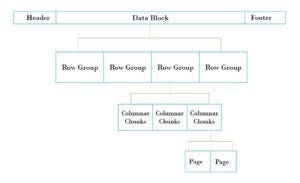

In a Parquet file, the metadata is stored at the end of the file. To work with blob storage, use the container client to get the list of blobs from the specified path. A similar task in .NET is reading a CSV file from a URL and binding it to a DataGrid using VB.
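To illustrate the point that Parquet metadata sits at the end of the file: a Parquet file starts and ends with the 4-byte magic `PAR1`, and the 4 bytes before the trailing magic hold the footer (metadata) length as a little-endian integer. The sketch below reads only that length using the standard library. The helper name `footer_length` is hypothetical, and the demo file is a synthetic byte layout that mimics the framing, not a real Parquet file.

```python
import os
import struct
import tempfile

MAGIC = b"PAR1"


def footer_length(path: str) -> int:
    """Return the size in bytes of the footer metadata of a Parquet file."""
    with open(path, "rb") as f:
        head = f.read(4)
        f.seek(0, os.SEEK_END)
        if f.tell() < 12:  # leading magic + footer length + trailing magic
            raise ValueError("file too small to be Parquet")
        f.seek(-8, os.SEEK_END)
        tail = f.read(8)
    if head != MAGIC or tail[4:] != MAGIC:
        raise ValueError("missing PAR1 magic bytes")
    # The footer length is a 4-byte little-endian unsigned integer.
    (length,) = struct.unpack("<I", tail[:4])
    return length


# Synthetic layout for demonstration only: magic, fake data, fake footer,
# footer length, magic. A real footer holds Thrift-encoded metadata.
fake_footer = b"meta-bytes"
payload = MAGIC + b"\x00" * 16 + fake_footer + struct.pack("<I", len(fake_footer)) + MAGIC

with tempfile.NamedTemporaryFile(suffix=".parquet", delete=False) as tmp:
    tmp.write(payload)
    demo_path = tmp.name

demo_length = footer_length(demo_path)
os.remove(demo_path)
print(demo_length)  # 10
```

Because the footer is located from the end of the file, a reader can fetch the schema and row-group information without scanning the data pages first.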

Insights in your Parquet. Read a Parquet file. How to view an Apache Parquet file in Windows.

Use the `list_blob` function with the prefix `part-`, which will get one. Parameters: `path`, a str, path object or file-like object. Read Parquet files from partitioned directories.

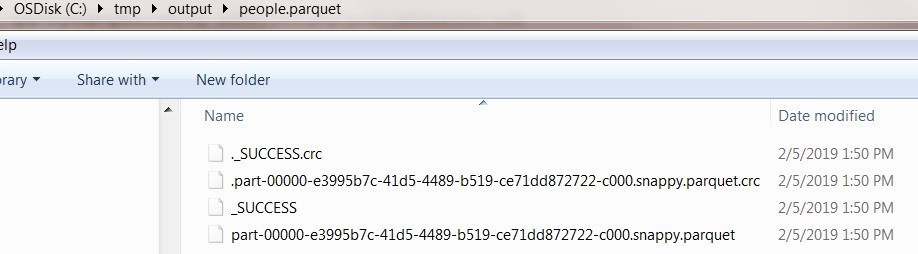

In the article "Data Partitioning Functions in Spark (PySpark) Deep Dive", I showed how to create a directory structure. Select the drive you want to encrypt and follow the instructions on the screen. Read the Parquet file from step 3.
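On partitioned directories: Spark-style partitioning commonly writes one directory per partition value, named `column=value`, with `part-` files inside. The sketch below shows how those values can be recovered from a path with the standard library. The helper name `partition_values` and the example path are hypothetical, and the sketch assumes the `column=value` naming convention.

```python
from pathlib import PurePosixPath


def partition_values(path: str) -> dict[str, str]:
    """Extract column=value pairs from the directory segments of a path."""
    values: dict[str, str] = {}
    # Skip the last segment, which is the file name itself.
    for segment in PurePosixPath(path).parts[:-1]:
        key, sep, value = segment.partition("=")
        if sep and key:  # only segments shaped like key=value
            values[key] = value
    return values


# Hypothetical path, assuming column=value directory names.
example = "data/year=2022/month=12/part-0000.parquet"
partitions = partition_values(example)
print(partitions)  # {'year': '2022', 'month': '12'}
```

Readers that understand this layout can turn such directory names back into columns, so a filter on a partition column only needs to open the matching directories.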

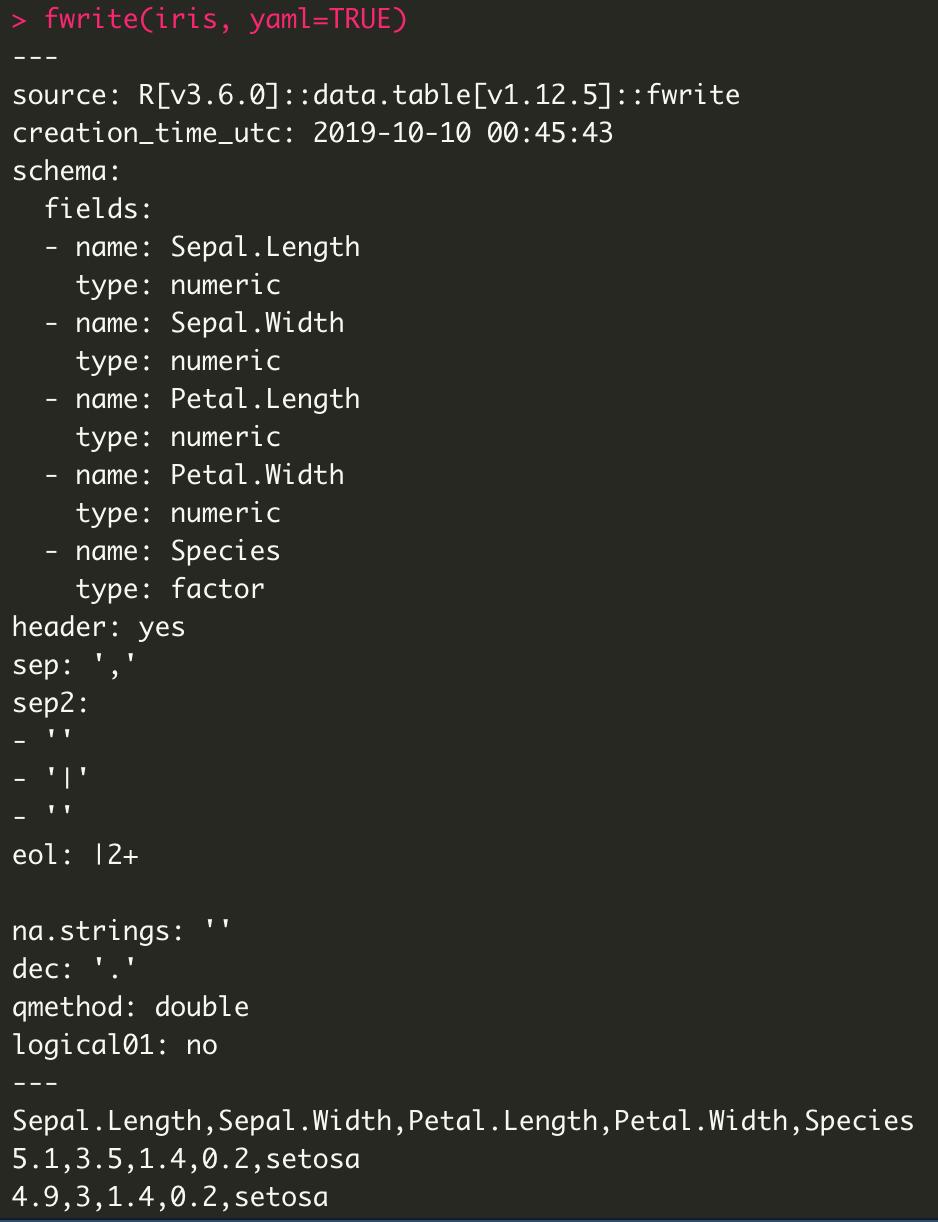

This example shows you how to read data from a Parquet file using Data Pipeline. The `file` argument accepts a character file name or URI, a raw vector, an Arrow input stream, or a FileSystem with path (`SubTreeFileSystem`). If a file name or URI is given, an Arrow InputStream is opened. Parquet file writing options.

Run this within your Python virtual environment, in either the terminal or the command line. In this demo code you are going to use.

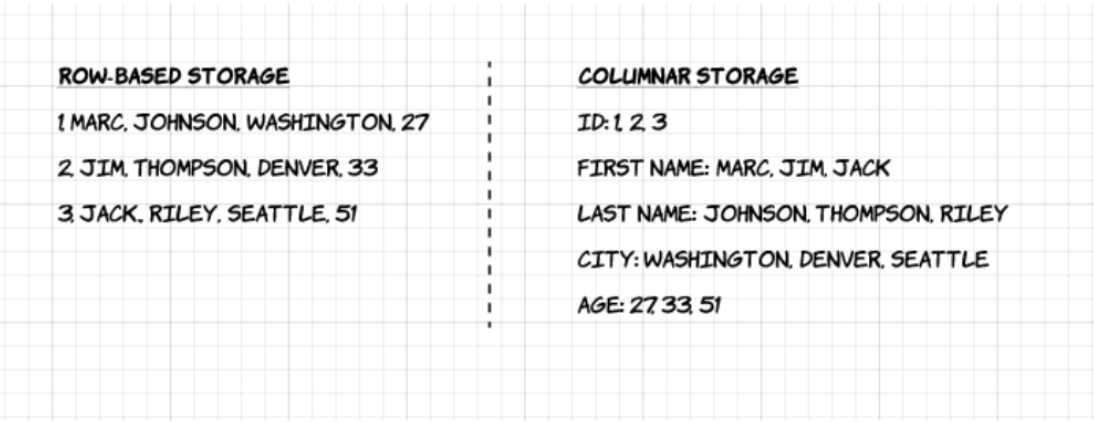

Parquet offers efficient data retrieval, efficient compression, etc. PySpark SQL provides methods to read a Parquet file into a DataFrame and to write a DataFrame to Parquet files: the `parquet()` functions of `DataFrameReader` and `DataFrameWriter`, respectively. First, we are going to need to install the pandas library in Python.

You can read and write bzip and gzip. Next, select "Encrypt a non-system partition/drive" and select Next. If you need to deal with Parquet data bigger than memory, Tabular Datasets and partitioning are probably what you are looking for.

First, you'll need to add a reference to the `System.Web`. Read Parquet Files Using the Fastparquet Engine in Python: the Parquet file is read using the fastparquet engine. As the data is written to the Parquet file, let's read the file.

How Fast Is Reading Parquet File With Arrow Vs Csv With Pandas By Tirthajyoti Sarkar Towards Data Science

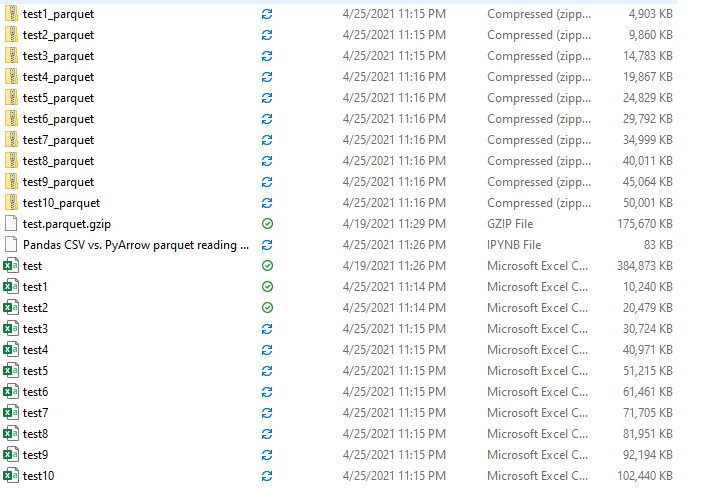

Hadoop Is It Better To Have One Large Parquet File Or Lots Of Smaller Parquet Files Stack Overflow

A Dive Into Apache Spark Parquet Reader For Small Size Files By Mageswaran D Medium

Convert Csv To Parquet File Using Python Stack Overflow

Awesome Csv Awesomecsv Twitter

Reading And Writing The Apache Parquet Format Apache Arrow V10 0 1

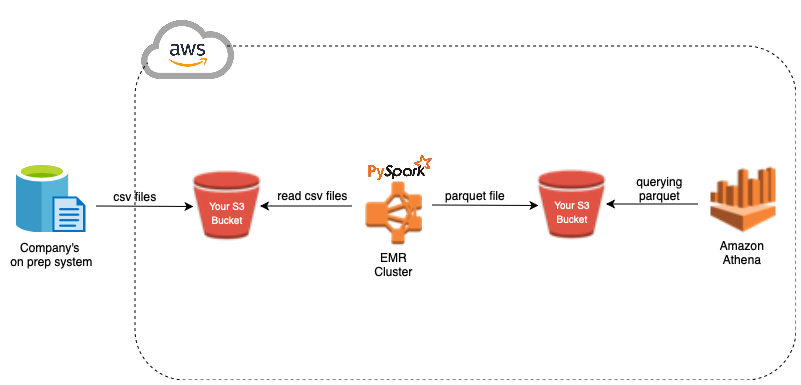

Efficient Data Storage With Apache Parquet In The Amazon Cloud By Ihor Shylo Medium

How To Read Write Parquet File Data In Apache Spark Parquet Apache Spark Youtube

What Is The Parquet File Format Use Cases Benefits Upsolver

Spark Reading And Writing To Parquet Storage Format Youtube

Parquet Best Practices Discover Your Data Without Loading It By Kevin Li Jan 2023 Towards Data Science

Solved Parquet Data Duplication Cloudera Community 103235

Pyspark Read And Write Parquet File Spark By Examples